Intro

A couple of years ago, I had the opportunity to work as a Research Associate on a labour market study in South Africa. Part of this study involved looking for suitable online job vacancies and applying for jobs on behalf of participants.

The process was greatly helped by the webscraping scripts we developed.1 I also learned a bit about how to programmatically extract information from websites using R, rvest, and RSelenium.2

Webscraping is about getting the thing you’re seeing on a webpage into a form you can work with on your computer: a dataset, a list, or whatever. It might be a single table or multiple tables across many pages, or a combination of elements, like job ads with associated information.

The kind of scraping I’ll discuss below is called “screen scraping” (or HTML parsing).

This is a data extraction and cleaning exercise: getting the content you want from the page HTML. Once you have the content in a form you can work with, it’s standard data cleaning stuff (manipulating strings, dates, &c.3).
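To give a flavour of that cleaning step, here is a minimal sketch using readr and stringr (both part of the tidyverse). The input values are copied from the outputs further down this post; the variable names are made up for illustration.

```r
library(readr)   # parse_number()
library(stringr) # str_squish(), str_remove()

## numbers scraped from a table arrive as text, with grouping commas
raw_votes <- c("10,026,475", "3,621,188")
votes <- parse_number(raw_votes) # numeric, commas dropped

## scraped text often has stray whitespace and leftover labels
raw_text <- "  DescriptionAn   established logistics company \n"
clean_text <- str_remove(str_squish(raw_text), "^Description")

## timestamps stored as text can be converted to a date-time
raw_time <- "2020-12-16 14:22:18"
scraped_at <- as.POSIXct(raw_time, format = "%Y-%m-%d %H:%M:%S", tz = "UTC")

votes
clean_text
scraped_at
```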

In doing this, you’ll be familiarising yourself with the specific website you’re interested in and figuring out where the information you want is, choosing the right CSS-selectors, working with URLs4 and figuring out how to iterate over (or “crawl”) pages.

In more complicated cases, it involves writing a script that can interact with the webpage (by driving a browser), to reveal the content you want. And if you want to build something more sophisticated, it might involve working with a database, a scheduler, and other tools.5 For instance, you might want to build something that extracts information regularly, tracks changes over time and stores it in a database.6

Approach

In the examples below, I extract a table from a Wikipedia.org page (trickier than I thought!), and — more elaborately — extract information about jobs from a site called Gumtree (.co.za).

For the most part, the approach is something like: (a) go to the site you want to scrape, (b) figure out what the things you want to extract are called (find the right CSS-selectors using an inspect tool), (c) use the read_html(), html_nodes(), and other functions in the rvest package to extract those elements, (d) store the results in the format you want to work with (a list, a tibble, &c.), (e) figure out how to iterate (if needed), (f) troubleshoot (handling errors, dealing with broken links, missing selectors, &c.), (g) throughout: lots of Googling.
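Steps (c) and (d) can be tried out without touching a real website. The sketch below parses a small HTML string instead of a URL; the class names (`ad`, `ad-title`, `place`) and the `/ads/...` paths are invented for the example and do not come from any real site.

```r
library(rvest)  # read_html(), html_nodes(), html_text(), html_attr()
library(tibble) # tibble()

## a made-up page with two ads (read_html() also accepts a literal HTML string)
page <- read_html('
  <html><body>
    <div class="ad">
      <a class="ad-title" href="/ads/1">Office assistant</a>
      <span class="place">Brackenfell</span>
    </div>
    <div class="ad">
      <a class="ad-title" href="/ads/2">Recruitment officer</a>
      <span class="place">City Centre</span>
    </div>
  </body></html>')

## (c) extract the elements by CSS-selector
titles <- page %>% html_nodes(".ad-title") %>% html_text()
places <- page %>% html_nodes(".place") %>% html_text()
hrefs  <- page %>% html_nodes(".ad-title") %>% html_attr("href")

## (d) store the results in a tibble
ads <- tibble(title = titles, place = places, link = hrefs)
ads
```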

Considerations

There are a few things to consider before investing time in writing a webscraping script:

- Is this already available? Perhaps it can be downloaded from the site as a spreadsheet already (have a look around), or perhaps the site has an API7 you can access to download data.

- Is there a low-effort way of doing this? For instance, maybe you can just copy-paste what you need into a spreadsheet or directly into R8? Or maybe there’s a tool you can use straight away.9

- How much copy-paste/ “low-effort” work are we talking here? I think writing a simple script can, in many cases, be the lowest effort (and cheapest) of all, but one might hesitate because it feels daunting. If you’ll need to copy-paste multiple things across multiple pages, rather write a script. And if it’s something you plan to do regularly — automate with a script.

- Is it OK for me to do this? This is a trickier question. It depends. And sometimes it’s hard to tell! Things you can do: check if the site has a robots.txt file10 (which, if a site has one, includes do’s and don’ts for scrapers/crawlers); perhaps ask yourself “would I feel OK about this information being pulled if this was my website?”; consider what you plan to do with the information you aggregate, and ask yourself if that seems OK. If you decide to scrape, be “polite” about it: don’t overwhelm the site.11 Don’t bother the site more than you need to, i.e. don’t send too many requests to a website per second. You can avoid this by trying to be efficient (don’t repeatedly query the website) and by including some “rests” in your code, to slow things down.
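One way to build in those “rests” is to wrap whatever function makes the request so that it can never be called more often than once every few seconds. This is only a sketch: `slow_down()` is a hypothetical helper (not part of rvest or any other package), and `toupper()` stands in for a real request so the example runs offline.

```r
## Hypothetical helper: returns a version of f() that waits until at least
## min_gap seconds have passed since the previous call
slow_down <- function(f, min_gap = 2) {
  force(f)
  last_call <- NULL
  function(...) {
    if (!is.null(last_call)) {
      waited <- as.numeric(difftime(Sys.time(), last_call, units = "secs"))
      if (waited < min_gap) Sys.sleep(min_gap - waited) ## rest
    }
    last_call <<- Sys.time()
    f(...)
  }
}

## toupper() is a stand-in for a function that fetches a page
slow_toupper <- slow_down(toupper, min_gap = 0.5)

started <- Sys.time()
first   <- slow_toupper("page one")
second  <- slow_toupper("page two") ## this call waits before running
elapsed <- as.numeric(difftime(Sys.time(), started, units = "secs"))
elapsed
```

In a scraper, the same wrapper could be applied to `read_html`, e.g. `polite_read_html <- slow_down(rvest::read_html, min_gap = 2)`.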

The front-end of the web

What you see when you open a webpage is brought to you by:

- HTML – basic content and layout semantics (adding structure to raw content)

- CSS – adding styles (formatting, colours, sizes of things, sometimes where things are placed, &c.)

- JavaScript – making things interactive (behaviour of things on the page)

Here is an example on CodePen bringing these together.12

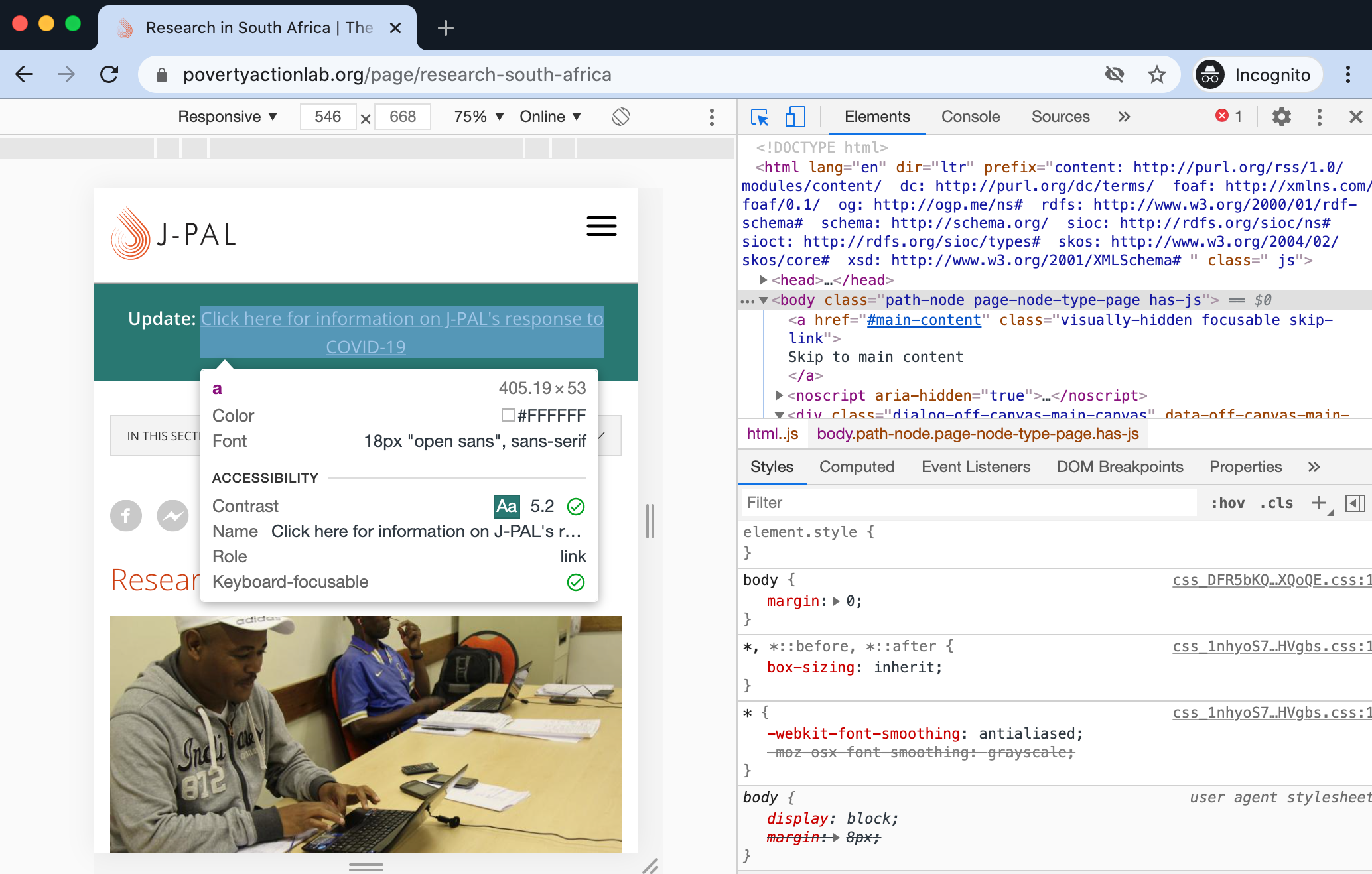

You can also see how these come together by using your browser’s inspect tool. On Chrome you can right click and select “inspect” or go to More Tools/ Developer Tools13.

Here’s what it looks like:

Tools

I use a combination of the inspect tool and SelectorGadget (“point and click CSS selectors”) to identify what the elements I want to extract are called.

I use the rvest R package (“rvest helps you scrape information from webpages”), and a number of tidyverse tools for data manipulation. Code is heavily commented.
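To get a feel for what CSS-selectors look like before pointing them at a real page, it can help to try them on a tiny HTML snippet. The markup below is invented (including the employer name “Acme”), but the selector styles (tag, id, class, and position with `:nth-child()`) are the same kinds used in the examples that follow.

```r
library(rvest)

## a made-up ad page: one heading and a list of attributes
page <- read_html('
  <html><body>
    <h1 id="title">Office assistant</h1>
    <div class="attributes">
      <div class="attribute"><span class="name">Location</span><span class="value">Paarl</span></div>
      <div class="attribute"><span class="name">Employer</span><span class="value">Acme</span></div>
    </div>
  </body></html>')

by_tag   <- page %>% html_nodes("h1") %>% html_text()     ## select by tag name
by_id    <- page %>% html_nodes("#title") %>% html_text() ## select by id
by_class <- page %>% html_nodes(".value") %>% html_text() ## select by class (all matches)

## the .value inside the second .attribute element
by_position <- page %>%
  html_nodes(".attribute:nth-child(2) .value") %>%
  html_text()

by_class
by_position
```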

Examples

Set up

## Load libraries ----

library(tidyverse) ## for data manipulation (various packages)

library(rvest) ## for scraping

library(textclean) ## some cleaning functions

library(robotstxt) ## to get robotstxt protocols

library(kableExtra) ## for printing out an html table in this post

First, I check the robots.txt files (see https://www.gumtree.co.za/robots.txt and https://en.wikipedia.org/robots.txt).

Note: these protocols are not easy to interpret. I think the rule of thumb is: be polite.

## Use the robotstxt package to check if a bot has permissions to access page(s)

robotstxt::paths_allowed("https://www.wikipedia.org/") ## should be TRUE

> [1] TRUE

robotstxt::paths_allowed("https://www.gumtree.co.za/") ## should be TRUE

> [1] TRUE

Table example

In the first example, I extract a table from Wikipedia.

I tried using SelectorGadget and the Chrome inspection tool, but was struggling to figure out the correct selector.

Something you can do (I learned, after some Googling) is to get all tables on the page; then you can identify which one is the right one using string matching (e.g. the grep() function).
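Here is the same idea on a made-up page with two tables, so it can be run offline. The first table is filler; the second reuses two rows from the Wikipedia output shown further down. This sketch matches on each table’s text with `grepl()`, which is a small variation on the `grep()` call used in the real example.

```r
library(rvest)

## a made-up page with two tables
page <- read_html('
  <html><body>
    <table>
      <tr><th>Item</th><th>Note</th></tr>
      <tr><td>a</td><td>filler</td></tr>
    </table>
    <table>
      <tr><th>Party</th><th>Seats</th></tr>
      <tr><td>ANC</td><td>230</td></tr>
      <tr><td>IFP</td><td>14</td></tr>
    </table>
  </body></html>')

tables <- html_nodes(page, "table") ## all tables
length(tables)                      ## how many tables?

## which tables mention "ANC" anywhere in their text?
is_match <- grepl("ANC", html_text(tables), fixed = TRUE)

## parse the matching tables and keep the first one
results <- html_table(tables[is_match])[[1]]
results
```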

## Get Table element from Wikipedia ----

website <- read_html("https://en.wikipedia.org/wiki/Elections_in_South_Africa") ## get the website HTML

table <- website %>% html_nodes("table") ## all tables

length(table) ## how many tables?

> [1] 19

data <- html_table(table[grep("ANC", table)], fill = TRUE)[[1]] ## first table that mentions "ANC"

glimpse(data) ## let's see

> Rows: 55

> Columns: 8

> $ Party <chr> "list", "list", "list", "list", "list", "list", "list", "list",…

> $ Party <chr> "", "", "", "", "", "", "", "", "", "", "", "", "", "", "", "",…

> $ Party <chr> "ANC", "Democratic Alliance", "Economic Freedom Fighters", "IFP…

> $ Votes <chr> "10,026,475", "3,621,188", "1,881,521", "588,839", "414,864", "…

> $ `%` <chr> "57.50", "20.77", "10.79", "3.38", "2.38", "0.84", "0.45", "0.4…

> $ `+/−` <chr> "4.65", "1.36", "4.44", "0.98", "1.48", "0.27", "0.55", "New", …

> $ Seats <chr> "230", "84", "44", "14", "10", "4", "2", "2", "2", "2", "2", "2…

> $ `+/−` <chr> "19", "5", "19", "4", "6", "1", "2", "New", "New", "4", "1", "1…

head(data) ## great!

> Party Party Party Votes % +/− Seats +/−

> 1 list ANC 10,026,475 57.50 4.65 230 19

> 2 list Democratic Alliance 3,621,188 20.77 1.36 84 5

> 3 list Economic Freedom Fighters 1,881,521 10.79 4.44 44 19

> 4 list IFP 588,839 3.38 0.98 14 4

> 5 list Freedom Front Plus 414,864 2.38 1.48 10 6

> 6 list ACDP 146,262 0.84 0.27 4 1

Job posts

This is my main example: getting job information from a site called Gumtree.co.za.14

Things to note here, after inspecting the page: descriptions are truncated, and contact information is hidden. To reveal them, you need to click on these elements. We’re not going to get into headless browsers, and how to get your script to interact with page elements, but keep in mind that this is possible.

There are multiple postings on each page, and each one links to a full ad.

I extract the links on the first page (each title links to a full ad):

## reading in the HTML from this page on entry-level jobs

jobs <- read_html("https://www.gumtree.co.za/s-jobs/v1c8p1?q=entry+level")

## extract links on first page

links <-

jobs %>%

html_nodes(".related-ad-title") %>% ## identify the "title" element

html_attr("href") ## get the post link

head(links) ## great!

> [1] "/a-logistics-jobs/eastern-pretoria/junior-warehouse-controller-+-pta-non+ee/1008328733720911316948209"

> [2] "/a-internship-jobs/durbanville/are-you-young-and-unemployed-are-you-looking-for-an-opportunity/1008463343470912436560209"

> [3] "/a-clerical-data-capturing-jobs/somerset-west/permanent-admin-assistant-financial-services/1008560434300912467960009"

> [4] "/a-hr-jobs/city-centre/recruitment-officer/1008558338510910255520809"

> [5] "/a-hr-jobs/city-centre/human-resources-assistant/1008558343790910255520809"

> [6] "/a-office-jobs/brackenfell/office-assistant-brackenfell-r8-000-+-r9-000-pm/1008557939220911146640309"

I decided to extract the job title, description, job type, employer, location, and to include a time-stamp. I used the inspect tool to identify each element’s CSS-selector.

(There’s much more information on this page than the few elements I chose; the approach would be the same.)

## first link

jobs_1 <- read_html(paste0("https://www.gumtree.co.za", links[1]))

jobs_1

> {html_document}

> <html data-locale="en_ZA" lang="en" xmlns="http://www.w3.org/1999/html" class="VIP">

> [1] <head>\n<meta name="csrf-token" content="466ea01ccbcacd284aa5a9080a1099e1 ...

> [2] <body class="VIP">\n<noscript><iframe src="//www.googletagmanager.com/ns. ...

## get the job title

title <- jobs_1 %>%

html_nodes("h1") %>%

html_text() %>%

replace_html() %>% ## strip html markup (if any)

str_squish() ## trim leading/trailing whitespace and collapse repeated spaces

title

> [1] "Junior Warehouse Controller - PTA (Non-EE)"

This worked.

Since I want to do this for a few more things — title, description, &c., I code a function to make this easier, and to avoid repeating myself too much:

## function for cleaning single elements ----

clean_element <- function(page_html, selector) {

output <- page_html %>%

html_nodes(selector) %>%

html_text() %>%

replace_html() %>% ## strip html markup (if any)

str_squish() ## remove leading and trailing whitespace

output <- ifelse(length(output) == 0, NA, output) ## in case an element is missing

return(output)

}

This works (and I can always add a few more things to the function if I want to).
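One thing to be aware of: because `ifelse()` is given a length-one test, `clean_element()` returns only the first match when a selector matches several elements. If you would rather keep every match, a variant along these lines is one option. `clean_element_all()` is a hypothetical name, the HTML is made up, and the textclean step is left out to keep the sketch short.

```r
library(rvest)
library(stringr)

## Hypothetical variant of clean_element(): keeps all matches, pasted together
clean_element_all <- function(page_html, selector, sep = " | ") {
  matches <- page_html %>%
    html_nodes(selector) %>%
    html_text() %>%
    str_squish()
  if (length(matches) == 0) {
    return(NA_character_) ## in case an element is missing
  }
  paste(matches, collapse = sep)
}

## a made-up page with two .value elements and no .salary element
page <- read_html('
  <html><body>
    <span class="value"> Paarl </span>
    <span class="value">Acme</span>
  </body></html>')

both    <- clean_element_all(page, ".value")
missing <- clean_element_all(page, ".salary")
both
missing
```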

## let's try out the function!

## I got the CSS-selectors with SelectorGadget

description <- clean_element(jobs_1, ".description-website-container")

jobtype <- clean_element(jobs_1, ".attribute:nth-child(4) .value")

employer <- clean_element(jobs_1, ".attribute:nth-child(3) .value")

location <- clean_element(jobs_1, ".attribute:nth-child(1) .value")

time <- Sys.time()

## putting my results into a tibble (see "https://r4ds.had.co.nz/tibbles.html")

dat <-

tibble(

title,

description,

jobtype,

location,

time

)

dat ## looks good!

> # A tibble: 1 x 5

> title description jobtype location time

> <chr> <chr> <chr> <chr> <dttm>

> 1 Junior Ware… DescriptionAn establis… Non EE… Eastern Pret… 2020-12-16 14:22:18

What if I wanted to do this for all job posts on a page?

Iteration

I write another function, which will create a tibble15 for a job post, with the information I want. I can then use a for loop to apply the function to each job post and to put the result into a list of tibbles.

After I have a list of tibbles, I can combine them into a single dataset using dplyr’s bind_rows().
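The “list of tibbles, then `bind_rows()`” pattern can be seen in isolation with a stand-in function that doesn’t touch the web. In this sketch `fake_clean_ad()` and the `/ads/...` links are invented, and purrr’s `map()` is used in place of a for loop purely to show an alternative; the loop used in this post does the same job.

```r
library(purrr)  # map()
library(dplyr)  # bind_rows()
library(tibble) # tibble()

## Hypothetical stand-in for a function that scrapes one post:
## it returns a one-row tibble, just like the real thing would
fake_clean_ad <- function(link) {
  tibble(link = link, n_chars = nchar(link), time = Sys.time())
}

links <- c("/ads/1", "/ads/22", "/ads/333") ## made-up links

## apply the function to every link, giving a list of tibbles
output <- map(links, fake_clean_ad)

## combine the list of tibbles into a single tibble
all_ads <- bind_rows(output)
all_ads
```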

## function for cleaning all elements I want on a page ----

clean_ad <- function(link) {

page_html <- read_html(link) ## get HTML

## the list of items I want in each post

title <- clean_element(page_html, "h1") ## parse HTML

description <- clean_element(page_html, ".description-website-container")

jobtype <- clean_element(page_html, ".attribute:nth-child(4) .value")

employer <- clean_element(page_html, ".attribute:nth-child(3) .value")

location <- clean_element(page_html, ".attribute:nth-child(1) .value")

time <- Sys.time() ## current time

## I put the selected post info in a tibble

dat <-

tibble(

title,

description,

jobtype,

location,

time

)

return(dat)

}

## set up ----

## "fixing" link addresses

links <- paste0("https://www.gumtree.co.za", links)

links <- sample(links, 10) ## sampling 10 random links for the example

## I create a list (for storing tibbles), and a for loop to iterate through links

output <- list()

## iterating through links with a for loop ----

for (i in 1:length(links)) {

deets <- clean_ad(links[i]) ## my function for extracting selected content from a post

output[[i]] <- deets ## add to list

Sys.sleep(2) ## rest

}

## combining all tibbles in the list into a single big tibble

all <- bind_rows(output) ## fab!

glimpse(all)

> Rows: 10

> Columns: 5

> $ title <chr> "Handyman /driver /farmhand", "Permanent Admin Assistant …

> $ description <chr> "DescriptionHandyman /driver /farmhand. An entry level jo…

> $ jobtype <chr> NA, NA, NA, NA, NA, NA, "Non EE/AA", NA, "EE/AA", NA

> $ location <chr> "Howick, Midlands", "Somerset West, Helderberg", "Inner C…

> $ time <dttm> 2020-12-16 14:22:19, 2020-12-16 14:22:22, 2020-12-16 14:…

all$description <- str_trunc(all$description, 100, "right") ## truncate

all$description <- sub("^Description", "", all$description)

## I'm using kable to make an html table for the site

all %>%

kable() %>%

kable_paper(bootstrap_options = c("striped", "responsive"))

| title | description | jobtype | location | time |
|---|---|---|---|---|
| Handyman /driver /farmhand | Handyman /driver /farmhand. An entry level job live in on farm. Please whatsapp 082453… | NA | Howick, Midlands | 2020-12-16 14:22:19 |
| Permanent Admin Assistant (Financial Services) | The admin assistant is primarily responsible for performing after sales service offeri… | NA | Somerset West, Helderberg | 2020-12-16 14:22:22 |
| Are you young and unemployed. Are you looking for an opportunity. | Harambee is a 100% free opportunity for young South Africans who are unemployed and me… | NA | Inner City / CBD&Bruma, Johannesburg | 2020-12-16 14:22:24 |
| Vodacom Direct Sales and Marketing | Real Promotions is recruiting for Vodacom Sales Representatives within the Cape Town … | NA | De Waterkant, Cape Town | 2020-12-16 14:22:27 |
| Are you young and unemployed. Are you looking for an opportunity. | Harambee is a 100% free opportunity for young South Africans who are unemployed and me… | NA | Eastern Pretoria, Pretoria / Tshwane | 2020-12-16 14:22:30 |
| Are you young and unemployed. Are you looking for an opportunity. | Harambee is a 100% free opportunity for young South Africans who are unemployed and me… | NA | Randburg, Johannesburg | 2020-12-16 14:22:32 |
| Junior Warehouse Controller - PTA (Non-EE) | An established logistics company in Pretoria East will employ a Junior Warehouse Contr… | Non EE/AA | Eastern Pretoria, Pretoria / Tshwane | 2020-12-16 14:22:35 |
| Property Accountant | Leading Property Development Group, based in the Northern Suburbs of Cape Town, is loo… | NA | Bellville, Northern Suburbs | 2020-12-16 14:22:38 |
| Building and civil Internship entry level position | We have a position for a building/civil internship You should have a reasonable amount… | EE/AA | Umbilo, Durban City | 2020-12-16 14:22:41 |
| Human Resources Assistant | The HYPERCHECK Group has an exciting opportunity for a Human Resources Assistant at th… | NA | City Centre, Durban City | 2020-12-16 14:22:43 |

Nice!

How would one approach multiple pages?

One (hacky) approach is to use the URL and another for loop.16

In the example below, I extract the job links for the first 3 pages:

## set-up ----

pages <- 3 ## let's just do 3

job_list <- list()

link <- "https://www.gumtree.co.za/s-jobs/page-" ## url fragment

## iterate over pages ----

for (i in 1:pages) {

jobs <-

read_html(paste0(link, i, "/v1c8p", i, "?q=entry+level")) ## using paste0

links <-

jobs %>%

html_nodes(".related-ad-title") %>%

html_attr("href") ## get links

job_list[[i]] <- links ## add to list

Sys.sleep(2) ## rest

}

links <- unlist(job_list) ## make a single list

links <- paste0("https://www.gumtree.co.za", links)

head(links, 10) ## looks good!

> [1] "https://www.gumtree.co.za/a-logistics-jobs/eastern-pretoria/junior-warehouse-controller-+-pta-non+ee/1008328733720911316948209"

> [2] "https://www.gumtree.co.za/a-internship-jobs/durbanville/are-you-young-and-unemployed-are-you-looking-for-an-opportunity/1008463343470912436560209"

> [3] "https://www.gumtree.co.za/a-clerical-data-capturing-jobs/somerset-west/permanent-admin-assistant-financial-services/1008560434300912467960009"

> [4] "https://www.gumtree.co.za/a-hr-jobs/city-centre/recruitment-officer/1008558338510910255520809"

> [5] "https://www.gumtree.co.za/a-hr-jobs/city-centre/human-resources-assistant/1008558343790910255520809"

> [6] "https://www.gumtree.co.za/a-office-jobs/brackenfell/office-assistant-brackenfell-r8-000-+-r9-000-pm/1008557939220911146640309"

> [7] "https://www.gumtree.co.za/a-general-worker-jobs/somerset-west/permanent-administration-assistant/1008459525670911102256409"

> [8] "https://www.gumtree.co.za/a-logistics-jobs/paarl/permanent-jnr-logistics-coordinator/1008548513350911102256409"

> [9] "https://www.gumtree.co.za/a-fmcg-jobs/durbanville/storeman-pharmaceutical-fmcg/1008551592230911341410109"

> [10] "https://www.gumtree.co.za/a-accounting-finance-jobs/paarl/permanent-jnr-financial-accountant/1008548473800911102256409"

Things to think about

Make sure your script can handle errors17:

- CSS-selectors change over time (if you’re planning to run your script again in future, you should check)

- links stop working (page names change, or they stop existing)

- not all pages are necessarily the same (some elements may be missing from some pages) — see the “job type” variable above, for instance

- sites time out (that can break your script if you don’t account for it)

- the script might take a long time to run; how can you track progress?

- what other things might break your script?
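For time-outs and broken links in particular, base R’s `tryCatch()` can be used to retry a few times and then fall back to a placeholder instead of stopping the whole script. The sketch below is one possible shape for this: `with_retry()` and `make_flaky()` are hypothetical helpers written for the example, and the “request” is simulated so it runs offline.

```r
## Hypothetical helper: wraps f() so that errors are retried a few times,
## and a fallback value is returned if every attempt fails
with_retry <- function(f, tries = 3, wait = 1, otherwise = NA) {
  force(f)
  function(...) {
    for (attempt in seq_len(tries)) {
      result <- tryCatch(f(...), error = function(e) e)
      if (!inherits(result, "error")) {
        return(result) ## success, stop retrying
      }
      message("Attempt ", attempt, " failed: ", conditionMessage(result))
      if (attempt < tries) Sys.sleep(wait) ## rest before trying again
    }
    otherwise
  }
}

## Simulated request: fails on the first two calls, then succeeds
make_flaky <- function() {
  calls <- 0
  function(x) {
    calls <<- calls + 1
    if (calls < 3) stop("timed out")
    toupper(x)
  }
}

safe_flaky <- with_retry(make_flaky(), tries = 3, wait = 0.1)
result <- safe_flaky("ok") ## succeeds on the third attempt

always_fails <- with_retry(function(x) stop("broken link"), tries = 2, wait = 0.1)
fallback <- always_fails("x") ## gives up and returns NA

result
fallback
```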

Putting it all together

Approach:

- one function to clean an individual element;

- one function to clean an individual post (clean multiple elements and store the result as a tibble);

- one loop to extract links to individual posts over multiple pages and store these in a list;

- one loop to iterate over the list of links, apply the post-cleaning function, and store the results in a list (a list of tibbles);

- finally, collapse the list of tibbles into a single dataset and export it to your chosen location.

Bells and whistles: I use the purrr package’s possibly() function to deal with errors (so my script doesn’t break when a link is broken); I use the progress package to show me, in my console, how far along the scraping is.

## Libraries ----

library(tidyverse) ## for data manipulation (various packages)

library(rvest) ## for scraping

library(textclean) ## some cleaning functions

library(progress) ## for tracking progress in console

## Function 1: Clean an element ----

clean_element <- function(page_html, selector) {

output <- page_html %>%

html_nodes(selector) %>%

html_text() %>%

replace_html() %>% ## strip html markup (if any)

str_squish() ## remove leading and trailing whitespace

output <- ifelse(length(output) == 0, NA, output) ## in case an element is missing

return(output)

}

## Function 2: Clean an ad ----

clean_ad <- function(link) {

page_html <- read_html(link) ## get HTML

## the list of items I want in each post

title <- clean_element(page_html, "h1") ## parse HTML

description <- clean_element(page_html, ".description-website-container")

jobtype <- clean_element(page_html, ".attribute:nth-child(4) .value")

employer <- clean_element(page_html, ".attribute:nth-child(3) .value")

location <- clean_element(page_html, ".attribute:nth-child(1) .value")

time <- Sys.time() ## current time

## I put the selected post info in a tibble

dat <-

tibble(

title,

description,

jobtype,

location,

time

)

return(dat)

}

## Loop 1: Get my links ----

pages <- 5 ## let's just do 5

job_list <- list()

link <- "https://www.gumtree.co.za/s-jobs/page-" ## url fragment

for (i in 1:pages) {

jobs <-

read_html(paste0(link, i, "/v1c8p", i)) ## using paste0

links <-

jobs %>%

html_nodes(".related-ad-title") %>%

html_attr("href") ## get links

job_list[[i]] <- links ## add to list

Sys.sleep(2) ## rest

}

links <- unlist(job_list) ## make a single list

links <- paste0("https://www.gumtree.co.za", links)

## Troubleshooting ----

links <- sample(links, 20) ## sampling 20 (comment this out for more)

head(links) ## looks good!

links <- c(links, "ww.brokenlink.co.bb") ## append faulty link to test error handling

## Let's go! ----

## track progress

total <- length(links)

pb <- progress_bar$new(format = "[:bar] :current/:total (:percent)", total = total)

## error handling

safe_clean_ad <- possibly(clean_ad, NA) ## see purrr package

## list

output <- list()

## the loop

for (i in 1:total) {

pb$tick()

deets <- safe_clean_ad(links[i])

output[[i]] <- deets # add to list

Sys.sleep(2) ## resting

}

## combining all tibbles in the list into a single big tibble

all <- output[!is.na(output)] ## drop the NA placeholders left by failed links, if any

all <- bind_rows(all) ## Fab!

glimpse(all)

write_csv(all, "myfile.csv") ## export

Resources

- Mine Dogucu & Mine Çetinkaya-Rundel (2020): “Web Scraping in the Statistics and Data Science Curriculum: Challenges and Opportunities”, Journal of Statistics Education (fyi, see their use of purrr’s map_dfr() function!)

- How we learnt to stop worrying and love web scraping | Nature Career Column by Nicholas J. DeVito, Georgia C. Richards & Peter Inglesby

- Chapter 12: “Web scraping” in “Automate the Boring Stuff with Python” by Al Sweigart

- Headless browsing: R Bindings for Selenium WebDriver • rOpenSci: RSelenium

- Ethics/best practice

- More implementation

- String manipulation

- See also

The process involved both webscraping and a fair amount of manual searching!

See R for Data Science’s chapters on dates and strings; then: (1) Simple, Consistent Wrappers for Common String Operations • stringr, (2) GitHub - trinker/textclean: Tools for cleaning and normalizing text data, (3) Quantitative Analysis of Textual Data • quanteda (text analysis)

See Julia Evans’ “how URLs work”; also see Oliver Keyes & Jay Jacobs’ “urltools” R package (not something I’m getting into, but I think working with URLs programmatically is the way to go!)

Have a look at Heroku and their “Hobby-dev” PostgreSQL with the free Heroku scheduler

A bit different, but Simon Willison’s Git scraping: track changes over time by scraping to a Git repository looks very interesting

See, for instance, Octoparse or webscraper.io. Also see Google Sheets’ importHTML function (see the documentation, or Martin Hawksey’s tutorial, which looks good)

See here: “The robots.txt file must be located at the root of the website host to which it applies […] http://www.example.com/robots.txt”. See also: https://en.wikipedia.org/wiki/Robots_exclusion_standard; then, there’s helpful information in Peter Meissner’s robotstxt R package documentation

See https://www.internetingishard.com/html-and-css/introduction/ and https://www.w3schools.com/html/html_intro.asp

https://developers.google.com/web/tools/chrome-devtools/open

I wrote a short version of this example here: https://www.csae.ox.ac.uk/coders-corner/coders-corner

There are better ways of working with URLs: https://github.com/Ironholds/urltools

See safely() and possibly() from the purrr package (or tryCatch() from base R)